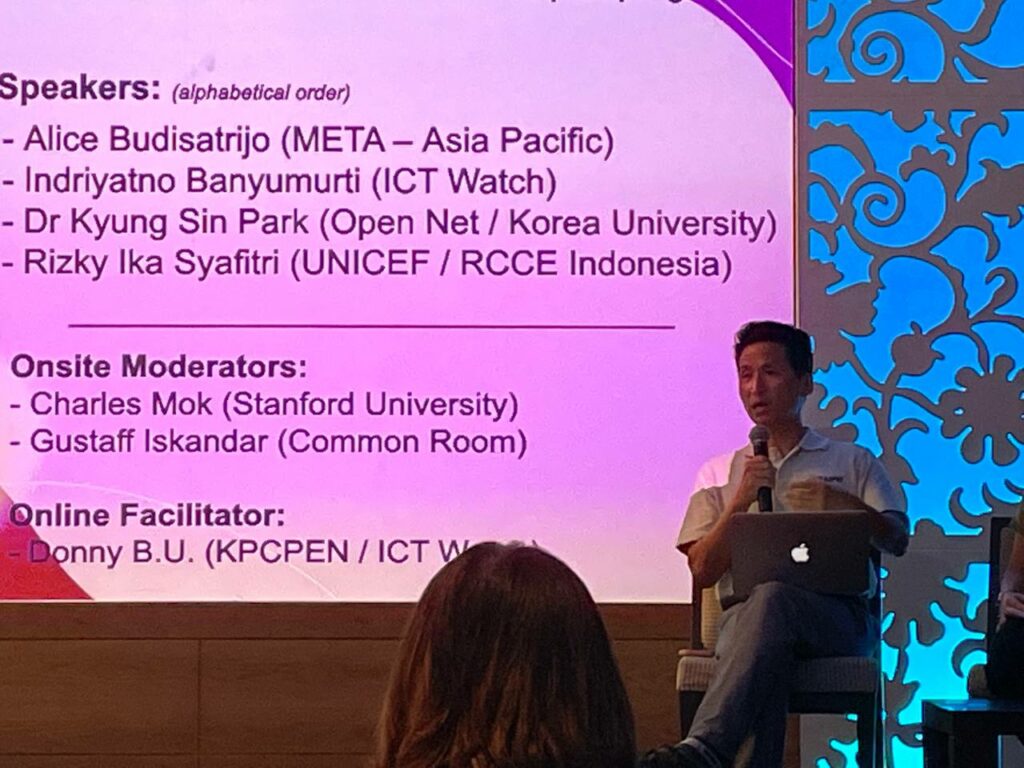

K.S. Park’s speech at the September 12 disinformation panel at APrIGF 2022 Singapore.

Event information: https://forms.for.asia/proposal/?proposalform=NjJkZWJlNDcxODVlNS8vNS8vNTUwLy8w

Penal disinformation law will stunt debates

Last month, 140 people who had been punished for disinformation in the 1960s under the military dictatorship of General Park were granted compensation. Their offense was spreading falsity about the State of Emergency and his new constitution. It took about 50 years for the Korean people to recognize the harms of criminally punishing disinformation. Criminal punishment of disinformation will only hamper efforts to correct falsity. This is consistent with the position of international human rights bodies. The reason it does not work is as follows: you feel the desire to protect the truth about a certain issue because there is still a debate about that issue, and criminal punishment will hamper a debate aimed at truth. If the issue is beyond dispute, like “the sun sets in the west”, policy makers will not feel the need to criminally punish falsity about it. Policy makers have felt a strong urge to fight disinformation on COVID19 because COVID19 is so new and there had to be so many disputes over what the good remedies are, what the good prevention measures are, etc.

Case in point: The Korean government did not engage in heavy criminal punishment of COVID19 disinformation because of the lesson learned from its experience with electoral disinformation.

Urgency of election is reason why criminal punishment should not be used

Policy makers want to control electoral disinformation because an election takes place over a short span of time, and therefore there is not enough time to correct falsity when disinformation adversely affects the election result. However, the urgency of the election is exactly the reason why there should not be criminal punishment. Criminal punishment will discourage people from having vigorous disputes on exactly those issues where truth and falsity matter. The result is less and less chance of obtaining the truth.

In elections, information about corruption is important but is not low-hanging fruit. Information about corruption, especially at high levels, is hidden behind walls of secrecy. Truth will not come forward by itself. Any attempt to obtain the truth about corruption will at first be based on flimsy and unstable evidence. Usually, truth will be obtained through a process of crowdsourcing. One person witnesses a politician driving somewhere. Another person sees that politician with a gang leader. These pieces of the puzzle have to be put together into a mosaic to obtain the truth. If any one person disclosing a piece of the puzzle is criminally punished for offering information with weak evidentiary support, the process of crowdsourcing will be nipped in the bud.

Case in point: In the 2007 presidential election that elected President Lee, an opposition party politician accused him of stock price manipulation. The politician was soon indicted for election disinformation, while the prosecutors cleared the then candidate Lee of the charge. In the meantime, Lee was elected President. The opposition politician was later convicted of electoral disinformation in 2012 while President Lee’s conservative successor was serving the term. Fast forward to 2020: the now former President Lee was indicted and imprisoned for stock price manipulation. This means that the criminal punishment of the opposition politician in 2012 kept the entire electorate in the dark for 10-15 years.

Three problems with regulation-based approach to disinformation: state propaganda, hate speech and pattern of diffusion

Not just criminal punishment but any regulation-based approach to disinformation is a bad idea. There are three problems with regulation-based approaches. First, state-sponsored disinformation is a much bigger problem because it comes with a brand of legitimacy (e.g., Russian government-sponsored disinformation on the Ukrainian war). Entrusting governments with the power to regulate disinformation is like entrusting fish to a cat for safekeeping.

Second, the other big chunk of disinformation is hate speech. People of different religions believe that other religions are spreading disinformation. This disinformation is not harmful in itself but becomes harmful when it is spread by a ruling majority targeting a minority with intent to persecute them. It is not truth or falsity that is harmful but the context of the speech: who is speaking, who is being targeted, and what the power relationship between the two is. Even truthful information commandeered for hate speech can be harmful. Even the most illogical falsity will be believed by majority mobs ready to attack minorities. A regulation-based approach always works through a binary judgment on whether something is true or false, and it blinds people to the real source of harm in disinformation, the underlying discriminatory structure of the society. Discrimination cannot be remedied or addressed by regulatory approaches to disinformation.

Third, people are concerned not about the falsity of statements but about the pattern of diffusion, especially automated trolling. Who knows patterns of diffusion best? Meta and the other intermediaries that operate platforms. They have the technology to detect the sources of automated trolling. Self-regulation is the way to go. In Korea 2 years ago, one online influencer was criminally punished for automating comments on a presidential candidate when all he did was use the open API. He was punished not for the contents of the automated comments but for the pattern of diffusion. This shows that our concern is aimed not at falsity or truth but at the pattern of diffusion.

Now, many nonprofits around the world automate petition-signing and grievance-filing for good purposes. The government became overzealous and put someone in jail for a plain online campaign. This again shows how a regulation-based approach can misfire. If the concern was really automated trolling, Korean online intermediaries should have been entrusted with detecting and moderating it, which NAVER actually did. The ensuing criminal punishment was unnecessary and will actually chill NAVER’s future deployment of disinformation detection technologies. If someone can go to jail for automating comments, NAVER itself will not be safe if it fails to take action on automated comments.
