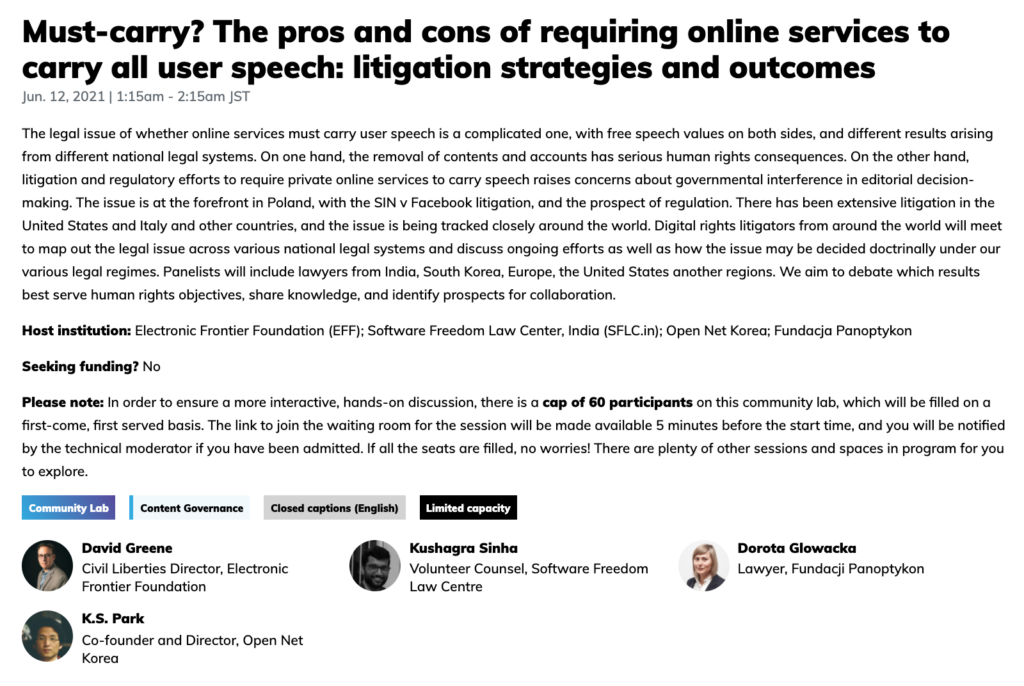

K.S. Park spoke at a RightsCon community lab session: https://rightscon.summit.tc/t/2021/events/must-carry-the-pros-and-cons-of-requiring-online-services-to-carry-all-user-speech-litigation-strategies-and-outcomes-daqceEhuEQmrhidurSxQUL

In Korea, his group filed two unsuccessful lawsuits. The first was against NAVER for taking down a video clip of a 4-year-old humming a copyrighted song, following the notice-and-takedown procedure under Korea's DMCA-style copyright law. The second was against DAUM for taking down an insulting comment by a progressive pundit against a conservative one, again following the defamation notice-and-takedown procedure under the country's ICT law. Both suits failed because the platforms were deemed to be carrying out actions permitted by the positive laws establishing the intermediary liability safe harbor (although the copyright suit against the rightsholder for a bad-faith takedown succeeded! Lenz in Korea).

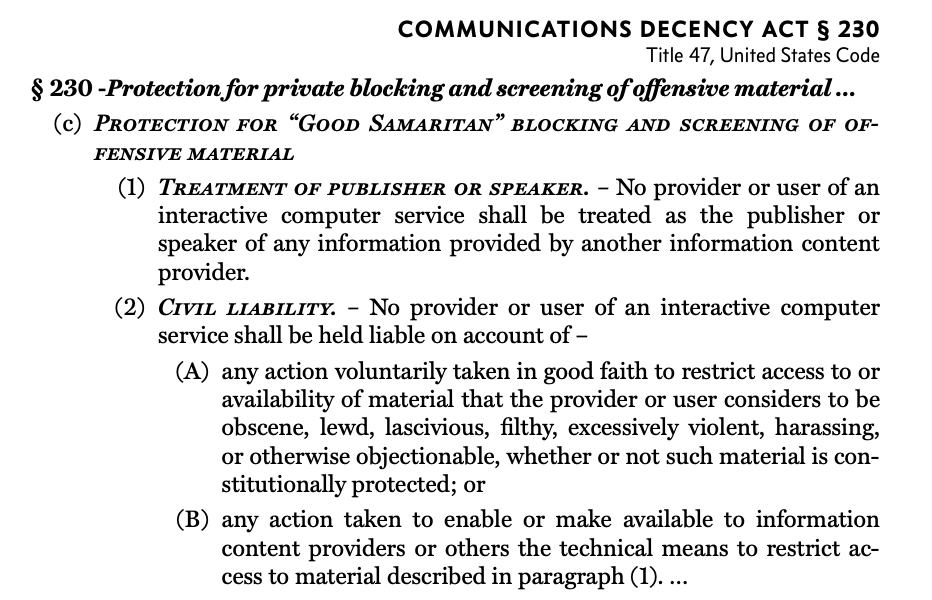

These lawsuits remind us of a dilemma that every advocate for must-carry rights must face: will such rights adversely affect the intermediary liability safe harbor? CDA 230 started out as an encouragement for self-policing. The idea was that platforms should not be liable for taking things down (paragraph (c)(2)), and also should not be attributed scienter (knowledge) of illegal content just because they take things down. To strengthen this rule, CDA 230 added the first paragraph, (c)(1), immunizing platforms from the role and responsibility of a publisher. But this paragraph became more powerful and influential, and ended up establishing a rule that platforms are immune for NOT taking content down. Now, if platforms are held liable FOR taking things down through must-carry litigation, they may come to be seen as exercising more control over content, and people may start arguing that platforms should also be held liable for NOT taking things down: the end of CDA 230.

There were also two successful suits: one against an online community moderator for taking down content merely because it was offensive to the majority of community members, and the other against the Navy website for taking down content critical of the Korean Navy's plan to build a new military base. The first succeeded mainly on a contract-based theory, on account of the community's purpose, socializing, which implies that some offensive comments must be tolerated. The second succeeded on account of the public forum nature of a government website.

Finally, a new and successful theory based on competition law is emerging. Korea's competition authority, the Fair Trade Commission, fined two major platforms for lowering the ranking of shopping mall content from their competitors, finding that the platforms were dominant in the market and had abused that dominance. Lowering a ranking is similar to taking content down. So maybe in the future we should add a fourth theory of must-carry litigation: competition.

*Earlier, David Greene began by laying out three possible theories for holding platforms liable for taking down content:

(1) State action: some of the early decisions were based on the idea that internet service was made possible by public resources.

(2) “Common carriage”: increasingly, the suits focus on “common carriage” obligations.

(3) Positive right of freedom of speech: SIN v. Facebook and other suits make arguments based on a positive freedom of speech, as opposed to a negative freedom of speech such as “The State shall NOT restrict freedom of speech.”

Both state action and common carriage are bound to fail eventually, for different reasons. State action fails because platforms are completely private. Common carriage fails because platforms are becoming more diverse, each with its own voice, and are diverging into two kinds: general-purpose platforms, on which governments around the world are threatening to impose broadcaster-like obligations, and special-purpose platforms, whose own freedom of speech profoundly defines their content governance. The middle ground is fast disappearing. From free speech advocates' point of view, the positive right theory is the only hope. Poland's new proposal for a Freedom of Speech Council may leave ample room for abuse, for example over impartiality and data retention, but maybe we should salvage the world's first attempt at creating a positive right to online free speech.
